XGBoost: A Scalable Tree Boosting System

XGBoost is an optimized distributed gradient boosting system designed to be highly efficient, flexible and portable. It implements machine learning algorithms under the Gradient Boosting framework. XGBoost provides parallel tree boosting (also known as GBDT or GBM) that solves many data science problems quickly and accurately. The same code runs on major distributed environments (Hadoop, SGE, MPI) and can solve problems beyond billions of examples. The most recent version integrates naturally with dataflow frameworks (e.g., Flink and Spark).
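As a rough illustration of the Gradient Boosting framework, and not of XGBoost's own implementation, the sketch below builds an additive model from depth-one regression trees (stumps) on a single numeric feature, assuming squared-error loss. All function names are hypothetical. XGBoost itself uses second-order gradient statistics, a regularized objective and deeper trees.

```python
from typing import List, Tuple

# A stump is (threshold, left_value, right_value); x <= threshold goes left.
Stump = Tuple[float, float, float]


def fit_stump(x: List[float], r: List[float]) -> Stump:
    """Fit a depth-1 regression tree to targets r by exhaustive threshold search."""
    mean = sum(r) / len(r)
    best: Stump = (float("inf"), mean, mean)  # constant fallback if no split exists
    best_sse = float("inf")
    for t in sorted(set(x)):
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        if not right:
            continue  # threshold at the maximum puts every row on the left
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((v - lv) ** 2 for v in left) + sum((v - rv) ** 2 for v in right)
        if sse < best_sse:
            best, best_sse = (t, lv, rv), sse
    return best


def predict_stump(s: Stump, xi: float) -> float:
    t, lv, rv = s
    return lv if xi <= t else rv


def boost(x: List[float], y: List[float], n_rounds: int = 50, eta: float = 0.3):
    """Additive training under squared loss L = (y - F)^2 / 2.

    The negative gradient of L with respect to F is the residual y - F, so each
    new stump is fit to the current residuals and added with shrinkage eta."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    stumps: List[Stump] = []
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        s = fit_stump(x, residuals)
        stumps.append(s)
        pred = [pi + eta * predict_stump(s, xi) for pi, xi in zip(pred, x)]
    return base, eta, stumps


def predict(model, xi: float) -> float:
    base, eta, stumps = model
    return base + eta * sum(predict_stump(s, xi) for s in stumps)
```

For example, `boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])` returns a model whose predictions approach 1 for inputs up to 3 and 5 above it, because each round shrinks the remaining residual by a factor of `1 - eta`.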

Reference Paper

- Tianqi Chen and Carlos Guestrin. XGBoost: A Scalable Tree Boosting System. Preprint.

Technical Highlights

- Sparsity-aware tree learning to optimize for sparse data.

- Distributed weighted quantile sketch for quantile finding and approximate tree learning.

- Cache-aware learning algorithm.

- Out-of-core computation system for training when the data does not fit in main memory.
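The sparsity-aware split finding listed above can be illustrated for a single feature. This is a simplified sketch under stated assumptions: missing entries are encoded as `None`, per-instance first- and second-order gradient statistics `g` and `h` are given, and candidate thresholds are midpoints between adjacent distinct present values (a choice made here for readability). The gain is the regularized split score from the XGBoost paper, with λ as `lam` and γ as `gamma`. The function names are hypothetical. The loop visits only the non-missing entries, and at each threshold the missing rows are tried on both sides so that a default direction is learned.

```python
from typing import List, Optional, Tuple


def split_gain(gl: float, hl: float, gr: float, hr: float,
               lam: float = 1.0, gamma: float = 0.0) -> float:
    """Regularized gain: 1/2 [GL^2/(HL+lam) + GR^2/(HR+lam) - G^2/(H+lam)] - gamma."""
    def score(g: float, h: float) -> float:
        return g * g / (h + lam)
    return 0.5 * (score(gl, hl) + score(gr, hr) - score(gl + gr, hl + hr)) - gamma


def best_split_with_default(x: List[Optional[float]], g: List[float], h: List[float],
                            lam: float = 1.0, gamma: float = 0.0
                            ) -> Tuple[float, Optional[float], bool]:
    """Return (gain, threshold, default_left) for one feature (hypothetical helper).

    Only present (non-None) values are enumerated; rows whose value is None
    follow a learned default direction, whichever side yields the larger gain."""
    G, H = sum(g), sum(h)  # totals over all rows in the node, missing included
    present = sorted((xi, gi, hi) for xi, gi, hi in zip(x, g, h) if xi is not None)
    G_pres = sum(p[1] for p in present)
    H_pres = sum(p[2] for p in present)
    best: Tuple[float, Optional[float], bool] = (float("-inf"), None, False)
    gl = hl = 0.0
    for k in range(len(present) - 1):
        xi, gi, hi = present[k]
        gl, hl = gl + gi, hl + hi
        x_next = present[k + 1][0]
        if xi == x_next:
            continue  # no threshold separates equal values
        threshold = (xi + x_next) / 2
        # Default right: the left child holds only the present rows seen so far.
        gain_right = split_gain(gl, hl, G - gl, H - hl, lam, gamma)
        # Default left: the right child holds only the remaining present rows.
        gr, hr = G_pres - gl, H_pres - hl
        gain_left = split_gain(G - gr, H - hr, gr, hr, lam, gamma)
        if gain_right > best[0]:
            best = (gain_right, threshold, False)
        if gain_left > best[0]:
            best = (gain_left, threshold, True)
    return best
```

In this sketch, missing rows whose gradients resemble those of the large present values are routed right by default, and those resembling the small values are routed left, without ever being sorted or scanned individually.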
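The weighted quantile sketch proposes candidate split points so that neighboring candidates are separated by roughly an ε fraction of the total second-order (Hessian) weight. The code below is only an exact, single-machine stand-in for that idea, under the assumption that all values fit in memory and can be sorted; the distributed sketch instead maintains a mergeable, prunable summary with approximation guarantees. `weighted_quantile_candidates` and its parameters are hypothetical names.

```python
from typing import List


def weighted_quantile_candidates(x: List[float], h: List[float], eps: float) -> List[float]:
    """Exact, in-memory stand-in for a weighted quantile sketch (hypothetical helper).

    Candidates are placed roughly every eps fraction of the total weight sum(h),
    so values carrying more weight receive more candidate split points."""
    if not 0 < eps <= 1:
        raise ValueError("eps must be in (0, 1]")
    pairs = sorted(zip(x, h))
    total = sum(h)
    candidates = [pairs[0][0]]  # always keep the minimum value
    next_rank = eps
    cum = 0.0
    for xi, hi in pairs:
        rank = cum / total  # fraction of weight preceding this entry in sorted order
        if rank >= next_rank and xi != candidates[-1]:
            candidates.append(xi)
            while next_rank <= rank:  # skip rank targets already passed
                next_rank += eps
        cum += hi
    if pairs[-1][0] != candidates[-1]:
        candidates.append(pairs[-1][0])  # always keep the maximum value
    return candidates
```

For example, with `x = [1, 2, 3, 4]` and `eps = 0.5`, uniform weights yield `[1, 3, 4]`, while weights `[3, 1, 1, 1]` yield `[1, 2, 4]`: the heavier low end pulls the interior candidate to the left.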

Impact

- XGBoost is one of the most frequently used packages in winning solutions to machine learning challenges.

- XGBoost can solve billion-scale problems with few resources and is widely adopted in industry.

- See the XGBoost Resources page for a complete list of XGBoost use cases, including winning solutions to machine learning challenges, data science tutorials and examples of industry adoption.

Acknowledgement

The XGBoost open source project is actively developed by amazing contributors from the DMLC/XGBoost community.

This work was supported in part by ONR (PECASE) N000141010672, NSF IIS 1258741 and the TerraSwarm Research Center sponsored by MARCO and DARPA.

Resources

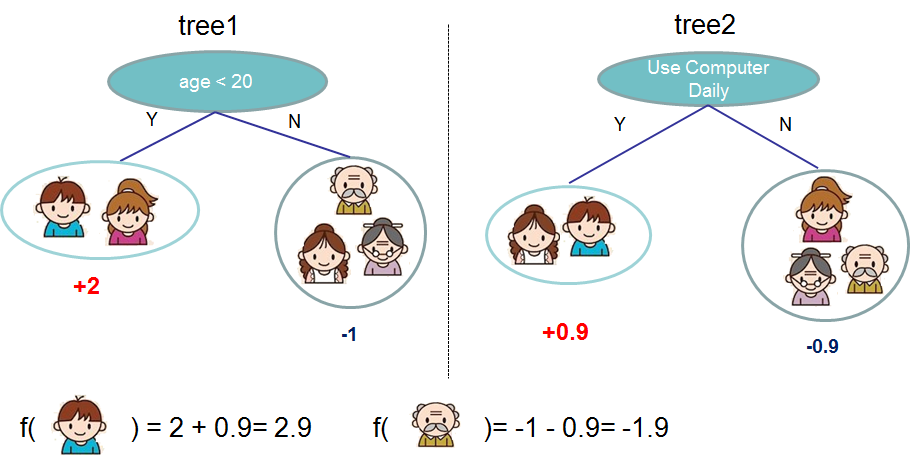

- Tutorial on Tree Boosting [Slides]

- XGBoost Main Project Repo for Python, R, Java, Scala and the distributed version.

- XGBoost Julia Package

- XGBoost Resources for all resources, including challenge-winning solutions and tutorials.